Falsely Flagged Family Photos Result in Criminal Investigations

Automated content scanning to protect children from online harms causes additional harm

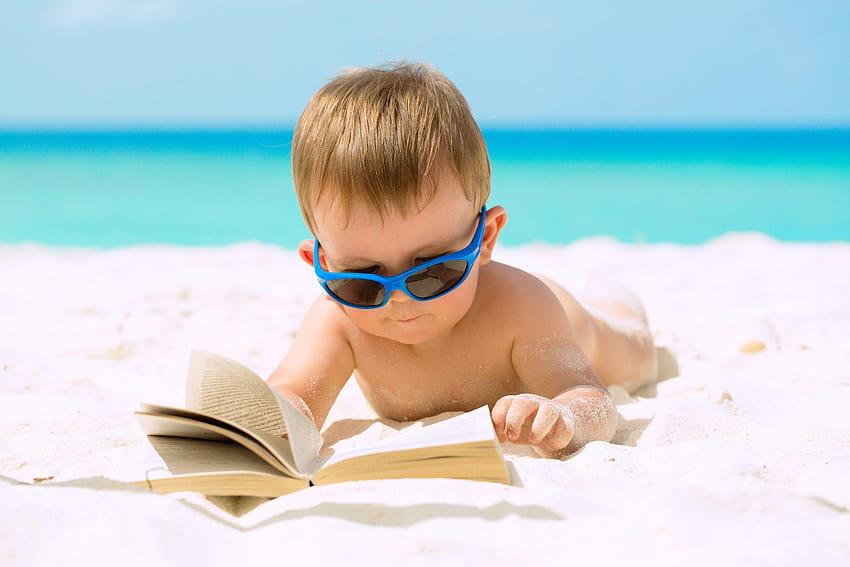

A family outing to the beach has become a nightmare for one Microsoft user in Germany, whose accounts were blocked after family photos were automatically uploaded to his OneDrive account.

The account-holder’s sister took photos of her son playing and bathing naked on the beach during a family outing, using the account-holder’s phone because it had a better camera than her own. The images were then automatically uploaded to his Microsoft 365 account, where automated content-scanning systems flagged them as suspicious and automatically locked the account.

Because the artificial intelligence system misidentified the images, the man has been unable to access his paid Microsoft 365 account, calendar, contacts, games, and other paid subscriptions for over two years. His other photos, research related to his studies, and work-related documents are also inaccessible.

The automated scanning systems also reported the Microsoft customer to police, who questioned him about the supposed child pornography material that had been uploaded to OneDrive.

Many users are unaware that providers such as Google and Microsoft, and social networks such as Facebook, automatically search for suspicious content and automatically report suspected infractions to police. Among them are users facing criminal charges after providing their child’s doctor with photos requested to aid in diagnosing a genital infection.

The cases illustrate how precarious the convenience of cloud services really is, and how dangerous it is to use indiscriminate and untargeted searches to protect young people:

Teenagers and other minors who share photos amongst themselves, or who try to send photos to authorities when reporting abuse, are also at risk of artificial intelligence flagging those images as suspicious and automatically reporting the sender — the young person — to police.

The requirement to have platforms and tech companies routinely and proactively scan all images and text to identify, block, and report child sexual abuse material is the focus of EU “chat control” legislation, and a feature of proposed Canadian, UK, and American laws that legislators say is necessary